Music has a way of working on us, doesn't it? It’s an emotional architect, really. It builds the feeling of a scene long before our brains have a chance to catch up. Think about it—the swelling strings that tell you the hero is about to win, or that unnerving quiet right before a jump scare. It's the unspoken language that cues our emotions. Music isn't just filler; it’s a core part of the story.

Here’s a little thought experiment. Picture the most heart-wrenching scene from your favorite film. Now, press mute. Suddenly, that powerful dialogue feels a bit hollow, doesn't it? The big action sequence looks almost clumsy, and the emotional peak just… fizzles. This simple test proves a fundamental truth: music gives visuals the emotional weight they can't carry alone.

This sonic layer gets to work on a subconscious level, guiding how we interpret what we see and pulling us deeper into the narrative. It’s an incredibly potent tool that creators use to hit very specific emotional targets, whether in a film, a video game, or a podcast.

Music is a master multitasker. It effortlessly sets the tone, anchors us in a specific time and place, and can even hint at what’s to come. That simple, repeating piano line? It can plant a seed of unease that blossoms into full-blown dread minutes later, all before anything remotely scary happens on screen.

Let’s break down what it’s really doing:

Music is the shorthand of emotion. It bypasses the rational brain and speaks directly to the heart, making it one of the most effective tools in a storyteller's arsenal.

When all is said and done, music is what turns passive watching into an active, emotional ride. It's the invisible current that makes a simple scene unforgettable, embedding it in our memory long after the credits roll.

The jump from physical media like CDs and vinyl to on-demand streaming was way more than just a tech upgrade. It completely rewired how we connect with music.

Platforms like Spotify and Apple Music turned sound from a product you owned into something you just… access. It's like water from a tap. This shift created a world where music is a constant, personalized soundtrack for pretty much everything we do.

And that unprecedented access changed the game. We don't really listen to albums from start to finish anymore, do we? Instead, we bounce between algorithm-generated playlists, curated moods, and those never-ending artist radio stations. Music discovery, once in the hands of radio DJs and record store clerks, now belongs to complex algorithms that learn our tastes with scary accuracy.

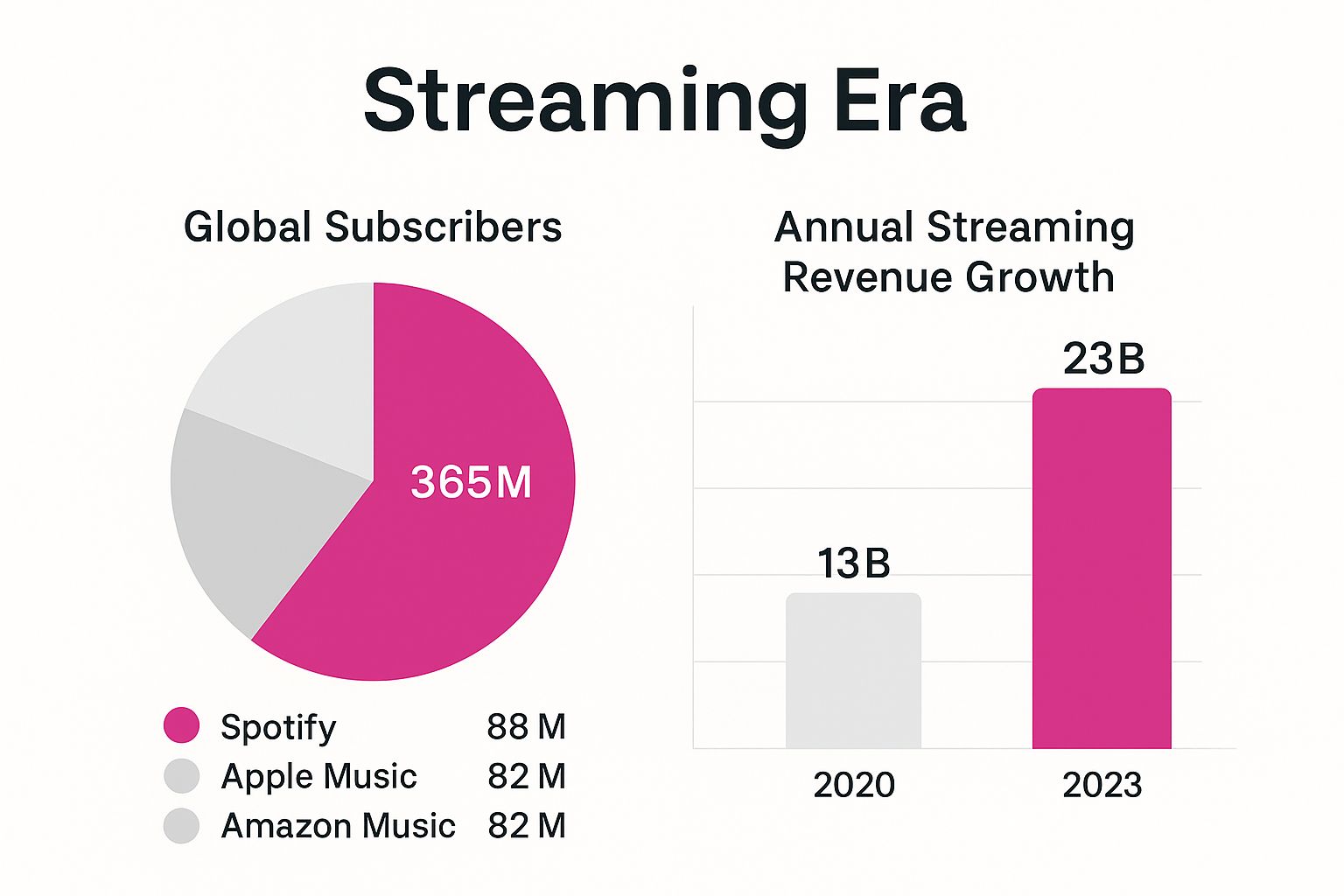

Financially, the impact has been massive. Streaming is now the undisputed engine of the modern music industry, and the numbers showing its scale are pretty wild.

This visual gives you a sense of just how big the major platforms are and how quickly the industry's revenue has exploded.

As you can see, it's not just about the huge user bases. The financial growth in just a few years has been staggering.

This new reality has huge implications for how music in the media is picked and heard. With millions of tracks just a click away, the fight for a listener's attention is brutal. A song has to grab you instantly, whether it’s in a TikTok video, a Netflix show, or a car commercial.

The "skip" button is the ultimate gatekeeper. If a track doesn't hit hard in the first few seconds, it’s probably getting skipped and forgotten.

This modern listening habit puts a ton of pressure on production quality. Your mix and master have to sound powerful and clear on everything—from high-end studio monitors to, more realistically, car stereos and cheap earbuds.

For artists and producers, this means getting a handle on an effective audio mastering chain isn't just a "nice to have." It's a matter of survival.

The numbers behind this shift are mind-boggling. By early 2025, the global music streaming market hit a valuation of $46.7 billion, up roughly 35% from $34.5 billion in 2022.

This growth is fueled by a subscriber base that keeps expanding, reaching 667 million people worldwide in 2023. In a market like the U.S., streaming now pulls in about 84% of all recorded music revenues, cementing its spot as the industry's absolute backbone. You can dig into more of these music streaming trends on sxmbusiness.com.

Music is a true chameleon. It completely changes its skin to fit whatever medium it's in. Think about it—the same song can feel totally different in a movie trailer versus a TV commercial. Why? Because its job has changed.

It's never just background noise. Music is a precision tool, used intentionally to get a specific reaction. A one-size-fits-all approach to audio just doesn't cut it. The emotional punch you need in a 30-second ad is a world away from the slow-burning tension you'd build in a horror film. Understanding these different roles is the first step to really grasping the power of music in the media.

In film, the score is like an invisible character whispering in your ear, telling you how to feel. Composers use those huge, sweeping orchestral pieces to give you a sense of epic scale, or they'll use jarring, dissonant strings to crank up the suspense until you can't take it anymore.

Advertising plays a totally different game. It’s all about creating an instant connection and drilling a brand into your memory. A catchy, upbeat jingle isn't just there to be fun; it's designed to burrow into your brain so you associate a good feeling with a product. It's about efficiency and recall, not a slow, unfolding story.

Then you have video games, which are another beast entirely. Game music has to be both immersive and dynamic, often reacting in real-time to what you do. An ambient, exploratory theme might instantly flip into a high-energy combat track the second an enemy pops up. It makes you feel like the conductor of your own experience.
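To make that "flip" concrete, here is a minimal Python sketch of state-driven music switching. Everything in it is hypothetical: the state names, the track filenames, and the `pick_track` and `MusicDirector` helpers are made up for illustration, and a real game would usually crossfade between cues rather than hard-switch.

```python
from dataclasses import dataclass

# Hypothetical mapping from game mood to music cue; filenames are illustrative only.
TRACKS = {
    "explore": "ambient_theme.ogg",
    "combat": "combat_theme.ogg",
}


@dataclass
class GameState:
    enemies_nearby: int = 0


def pick_track(state: GameState) -> str:
    """Return the cue that matches what the player is facing right now."""
    mood = "combat" if state.enemies_nearby > 0 else "explore"
    return TRACKS[mood]


class MusicDirector:
    """Remembers the current cue and reports when it needs to change."""

    def __init__(self) -> None:
        self.current = None

    def update(self, state: GameState) -> bool:
        """Call once per game tick; returns True if the cue changed."""
        wanted = pick_track(state)
        changed = wanted != self.current
        self.current = wanted
        return changed
```

Calling `update` every tick keeps the logic cheap: the audio layer only has to react on the ticks where it returns `True`, which is the moment the exploratory theme gives way to the combat track.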

Music in media isn’t just about filling silence. It's about targeted emotional engineering, where every note has a job to do, whether that's building a world, selling a product, or heightening a feeling.

While streaming is how most of us listen to music these days, getting a song placed in media is a massive—and often overlooked—revenue stream for artists. This is where synchronization licensing (or "sync" for short) comes into play. It's the process of getting permission to pair a song with visual media.

In 2024, streaming formats made up a whopping 69% of total global recorded music revenue. But don't sleep on sync licensing. It still pulled in $650 million from placements in films, TV, ads, and games. For an independent artist, landing a single sync placement in a popular Netflix show or a car commercial can be a career-making moment.

Of course, for brands trying to find the perfect track, navigating this world can be tricky. That's why many find that partnering with a specialized music influencer agency helps them cut through the noise and find the right fit.

To really see how different the strategies are, let's break down music's primary goals and emotional impact across the most common channels. The same core element—music—is bent and shaped to serve completely different masters.

Each channel demands a different creative approach, not just from the composer but from the artist as well.

Understanding these distinctions is everything for creators. If you're an artist hoping to get your music placed in one of these channels, you'll need to know how to promote music online with these specific use cases in mind. It's not just about making a great track; it's about understanding where that track can live and what job it can do.

Music’s power to grab hold of our emotions can feel like pure magic, but it’s actually rooted in predictable psychological triggers. Our brains are simply hardwired to react to sound, and savvy media creators know exactly how to pull those strings. It’s a lot like a chef using specific spices to guarantee a certain flavor; composers use musical ingredients to cook up precise feelings.

This often works through a process called emotional contagion, where we find ourselves unconsciously mirroring the emotion baked into a piece of music. A frantic, high-energy track gets your heart racing. A slow, mournful melody can make you feel genuinely down. It’s a gut reaction that bypasses rational thought and plugs directly into our primal responses.

So, how does a simple collection of notes pull this off? Composers lean on a shared musical vocabulary that our brains have learned to interpret over centuries. This is the secret language behind how music in the media can direct an audience's entire experience without a single word being spoken.

Just think about these common associations we all understand instantly:

These aren't just artistic whims; they're calculated psychological cues. When a filmmaker wants you to feel a character's internal turmoil, they don't need dialogue. A hesitant piano melody in a minor key will do the job perfectly.

Our brains don't just hear music; they feel it. We physically and emotionally internalize its rhythm, pitch, and volume, allowing it to become a direct line to our nervous system.

Beyond those in-the-moment reactions, music has a ridiculously powerful connection to memory. This is known as memory association. When a song is paired with a huge moment on screen, it becomes a permanent anchor for that memory and all the feelings that came with it.

It's why a theme song can instantly throw you back into the world of a movie. The heroic fanfare for Star Wars or the swashbuckling theme from Indiana Jones doesn't just remind you of the films; it makes you feel the rush of excitement and adventure all over again. Creators use this to forge deep, lasting bonds between their stories and their audience.

Look, getting a track to sound amazing in your studio is a huge win. But that's really only half the battle. The other half—the part that really matters—is making sure it sounds just as good everywhere else.

This is where audio mastering comes in. Think of it as the final quality check, the last coat of polish before your music hits the world. It’s what separates a professional, consistent-sounding track from one that falls flat, whether it's booming in a cinema or playing through a cheap pair of laptop speakers.

Without a solid master, that perfect mix you spent weeks on can completely fall apart. The bass might vanish, the vocals could get buried, or the whole thing might just sound thin and weak next to other songs. And with the wild variety of ways people listen to music today, this is a bigger problem than ever.

Let's face it: the way we listen to music in the media is all over the place. One fan might be using top-of-the-line noise-canceling headphones on a flight. Another is blasting your track from a tiny Bluetooth speaker at a bonfire. Each of those scenarios presents its own unique acoustic nightmare that can totally change how your music is perceived.

Just think about the most common ways people listen:

A great master doesn’t just make a song louder; it makes the song translatable. It ensures the core emotional impact of the track survives the journey from the studio to the listener's ears, no matter the destination.

People are listening more than ever, too. The average listener now spends about 18.4 hours per week tuned in. With streaming services—both subscription and ad-supported—accounting for over 30% of all music listening, your track is guaranteed to be heard in a ton of different situations.

So, how do you prepare for this? Mastering engineers focus on a few key things, like dynamic range (the gap between the quietest and loudest parts) and equalization, or EQ (the art of balancing frequencies). A track built for a dance club needs a completely different dynamic feel than one made for a quiet, dramatic movie scene.
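If you want a rough feel for what "dynamic range" means in numbers, the sketch below measures the gap in decibels between the loudest and quietest stretches of a signal. It is a simplified windowed-RMS measurement, not a standardized loudness metric, and the function names `rms_db` and `dynamic_range_db` are just illustrative.

```python
import math


def rms_db(samples):
    """RMS level of a block of samples in dBFS (full scale = 1.0)."""
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * math.log10(max(rms, 1e-12))  # floor avoids log(0) on pure silence


def dynamic_range_db(samples, window):
    """Gap in dB between the loudest and quietest windows of the signal."""
    levels = [
        rms_db(samples[i:i + window])
        for i in range(0, len(samples) - window + 1, window)
    ]
    return max(levels) - min(levels)


# Toy signal: one second of a quiet tone (amplitude 0.1), then one second at full level.
rate, freq = 8000, 100
quiet = [0.1 * math.sin(2 * math.pi * freq * n / rate) for n in range(rate)]
loud = [1.0 * math.sin(2 * math.pi * freq * n / rate) for n in range(rate)]
print(round(dynamic_range_db(quiet + loud, window=rate), 1))  # 20.0
```

A tenfold difference in amplitude works out to 20 dB. A club track would typically show a much smaller gap between its sections than a sparse, dramatic film cue.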

By the way, if your projects involve putting together different audio pieces for a specific platform, knowing how to efficiently combine sound files is a game-changer. It’s a fundamental skill that can seriously streamline your mastering workflow.
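As one possible way to do that, here is a small sketch using Python's built-in `wave` module to join files end to end. It assumes uncompressed WAV files that all share the same channel count, sample width, and sample rate; the `concat_wavs` name is made up for this example, and compressed formats like MP3 would need a different tool.

```python
import wave


def concat_wavs(input_paths, output_path):
    """Join WAV files end to end; all inputs must share the same format."""
    if not input_paths:
        raise ValueError("need at least one input file")
    fmt = None
    with wave.open(output_path, "wb") as out:
        for path in input_paths:
            with wave.open(path, "rb") as src:
                this_fmt = (src.getnchannels(), src.getsampwidth(), src.getframerate())
                if fmt is None:
                    # The first file decides the output format.
                    fmt = this_fmt
                    out.setnchannels(fmt[0])
                    out.setsampwidth(fmt[1])
                    out.setframerate(fmt[2])
                elif this_fmt != fmt:
                    raise ValueError(f"{path} has a different format: {this_fmt} != {fmt}")
                # Copy the raw audio frames straight across, no re-encoding.
                out.writeframes(src.readframes(src.getnframes()))
```

Refusing mismatched formats is a deliberate choice here: silently gluing a 44.1 kHz file onto a 48 kHz one would play the second part at the wrong speed and pitch.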

Ultimately, mastering is all about anticipating how and where your music will be heard. When you optimize your audio for every possible scenario, you’re protecting your creative vision and making sure your message lands with full impact, every single time.


We've covered how music hooks an audience emotionally, how it drives a story, and why the technical details matter. But the world of sound doesn't sit still. The future of music in the media is leaning into experiences that are deeper and more interactive than ever before. Technology isn't just changing how we listen; it's fundamentally changing what music can do.

Trends that sounded like something out of a sci-fi movie a few years ago are now becoming real, practical tools that creators are starting to get their hands on.

The next big wave in audio is all about making things personal and immersive. These aren't just minor tweaks—they're giving artists and producers entirely new ways to connect with people.

Here are a couple of the big ones to watch:

The future of audio isn't static anymore. Sound is becoming a dynamic, adaptive layer that responds to you and your environment. Media is about to get a whole lot more engaging.

On top of that, immersive spatial audio formats like Dolby Atmos are breaking out of high-end cinemas and landing straight in our headphones and living rooms. This tech lets you place individual sounds in a three-dimensional space all around the listener, creating a wild sense of realism.

As these tools become easier for everyone to use, nailing the fundamentals becomes even more critical. All this shiny new tech is built on the same foundation: the art and science of great sound.

Diving into the world of music for media can feel like learning a new language, filled with strange job titles and rules that aren't always obvious. Let's break down a few of the most common questions that come up.

Getting these basics down is a game-changer, whether you're a filmmaker hunting for that perfect song or a musician trying to get a track placed.

Think of a composer as the person who builds the sonic world from the ground up. They're the ones writing original, custom music—the "score"—specifically for a film, show, or game. That sweeping orchestral theme in an epic movie? The tense, moody synth track in a thriller? That's the composer's craft.

A music supervisor, on the other hand, is more of a curator and a deal-maker. Their job is to find and license pre-existing songs. They're the ones who track down that cool indie band track for a montage or clear a classic hit for the end credits. It's a logistical puzzle that involves negotiating two separate licenses for every single song: a sync license for the underlying composition (the melody and lyrics) and a master license for the specific recording.

The short version? Composers create. Supervisors license.

This is a big one. To legally use a copyrighted song in any project—from a quick YouTube video to a full-blown feature film—you absolutely have to get the right permissions. Skip this step, and you're walking straight into copyright strikes, takedowns, or even a lawsuit.

For major productions, that means chasing down both the sync and master licenses we just talked about. But for most creators and indie producers, there's a much saner path: royalty-free music libraries. Services like Epidemic Sound or Artlist have built massive catalogs where a single subscription gives you the green light to use their music without all the legal back-and-forth.

For independent creators, using royalty-free or Creative Commons music is the smartest, safest way to add amazing audio to your work without getting tangled up in a web of complex licensing deals.

Ever notice how a track that slaps on your headphones can sound flat or muddy in a car? You're not imagining it. A car cabin is an acoustic nightmare. It's a weird mix of reflective glass, sound-swallowing seats, and speakers stuck in odd places.

This wacky environment creates all sorts of problems, especially phase issues. This is where certain bass frequencies literally cancel each other out, making your low end sound weak or just disappear. It’s like the sound waves are fighting each other, and your kick drum is losing.
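You can see the basic effect with a few lines of Python. This is an idealized sketch, assuming two identical sine waves and nothing else; a real car cabin has multiple speakers and reflections, so cancellation is partial and varies by frequency. The `peak_of_sum` helper is hypothetical.

```python
import math


def peak_of_sum(freq_hz, phase_offset_deg, rate=48000, duration_s=0.1):
    """Peak level when two equal sine waves are summed with a phase offset."""
    offset = math.radians(phase_offset_deg)
    n_samples = int(rate * duration_s)
    return max(
        abs(
            math.sin(2 * math.pi * freq_hz * n / rate)
            + math.sin(2 * math.pi * freq_hz * n / rate + offset)
        )
        for n in range(n_samples)
    )


in_phase = peak_of_sum(60, 0)        # waves line up and reinforce each other
out_of_phase = peak_of_sum(60, 180)  # waves are mirror images and cancel
print(round(in_phase, 3), round(out_of_phase, 3))  # 2.0 0.0
```

When the two 60 Hz waves line up, the level doubles. When one arrives half a cycle late, they sum to essentially nothing, which is exactly what a vanishing kick drum sounds like.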

On top of that, you've got constant competition from road noise and engine hum. If a track isn't mastered to cut through that mess, it gets lost. Vocals become hard to understand, the bassline vanishes, and the whole thing just loses its punch. The next thing you know, the listener's thumb is hitting the skip button.

Don't let a terrible listening environment kill your track's vibe. CarMaster was built specifically to solve this problem. We master your music to sound huge and clear inside a car, while making sure it still translates perfectly to headphones and studio monitors. Protect your music from the skip button and get a pro-level, car-ready master in minutes.